Mask and Reason: Pre-Training Knowledge Graph Transformers for Complex Logical Queries

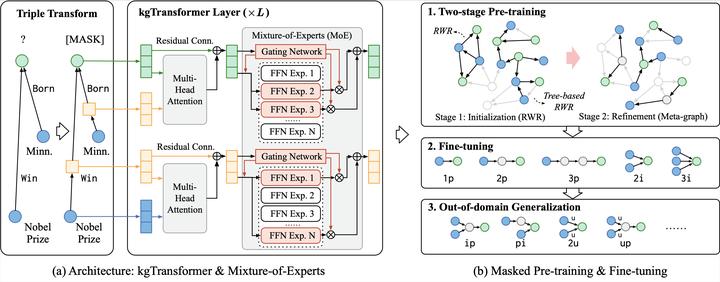

(a) kgTransformer with Mixture-of-Experts is a high-capacity architecture that can capture EPFO queries with exponential complexity. (b) Two-stage pre-training trades off general knowledge and task-specific sparse property. Together with fine-tuning, kgTransformer can achieve better in-domain performance and out-of-domain generalization.

(a) kgTransformer with Mixture-of-Experts is a high-capacity architecture that can capture EPFO queries with exponential complexity. (b) Two-stage pre-training trades off general knowledge and task-specific sparse property. Together with fine-tuning, kgTransformer can achieve better in-domain performance and out-of-domain generalization.Abstract

Knowledge graph (KG) embeddings have been a mainstream approach for reasoning over incomplete KGs. However, limited by their inherently shallow and static architectures, they can hardly deal with the rising focus on complex logical queries, which comprise logical operators, imputed edges, multiple source entities, and unknown intermediate entities. In this work, we present the Knowledge Graph Transformer (kgTransformer) with masked pre-training and fine-tuning strategies. We design a KG triple transformation method to enable Transformer to handle KGs, which is further strengthened by the Mixture-of-Experts (MoE) sparse activation. We then formulate the complex logical queries as masked prediction and introduce a two-stage masked pre-training strategy to improve transferability and generalizability. Extensive experiments on two benchmarks demonstrate that kgTransformer can consistently outperform both KG embedding-based baselines and advanced encoders on nine in-domain and out-of-domain reasoning tasks. Additionally, kgTransformer can reason with explainability via providing the full reasoning paths to interpret given answers.